Vista Center for the Blind and Visually Impaired was hosting a lecture series; the last presenter was a representative from Google. My brother, Daniel, and I attended with the express purpose of listening to, and meeting with, the Google representative. Our mission was to find solutions to the challenges Daniel experiences with technology, specifically using his phone, due to his cerebral palsy and vision impairment. We envisioned a phone on which Daniel could independently and accurately call, text, email, watch TV, and more.

After the lecture, we spoke with the Google representative, Charles Chen, which initiated our dialogue with Google. Over the next six years, we collaborated with Google on assistive technology products that would further not only Daniel’s independence, but also that of many others with disabilities.

In May 2012, Charles Chen asked us to identify specific tasks and priorities for Daniel's use of his phone. With Google, we then tested different phones (iPhone, Samsung Galaxy iterations), different Google operating systems (Ice Cream Sandwich, Jelly Bean), and different accessories (NFC tags to identify objects, the TalkBack speaking app, the Vlingo speaking app, and Bluetooth controllers including a game console and headset). Charles provided me with technical literature so I could understand programming tools such as App Inventor. We met at the Google campus and ate with members of the Disability Team and ‘Googlers’ who have disabilities. In May 2013, we met Jorge Silva of Tecla, which allows quadriplegics to use electronics. He helped Daniel test a joystick used to navigate the iPhone. It is a wonderful product that works well for individuals with sight but limited hand movement. Because of Daniel’s low vision, it did not suit his specific needs.

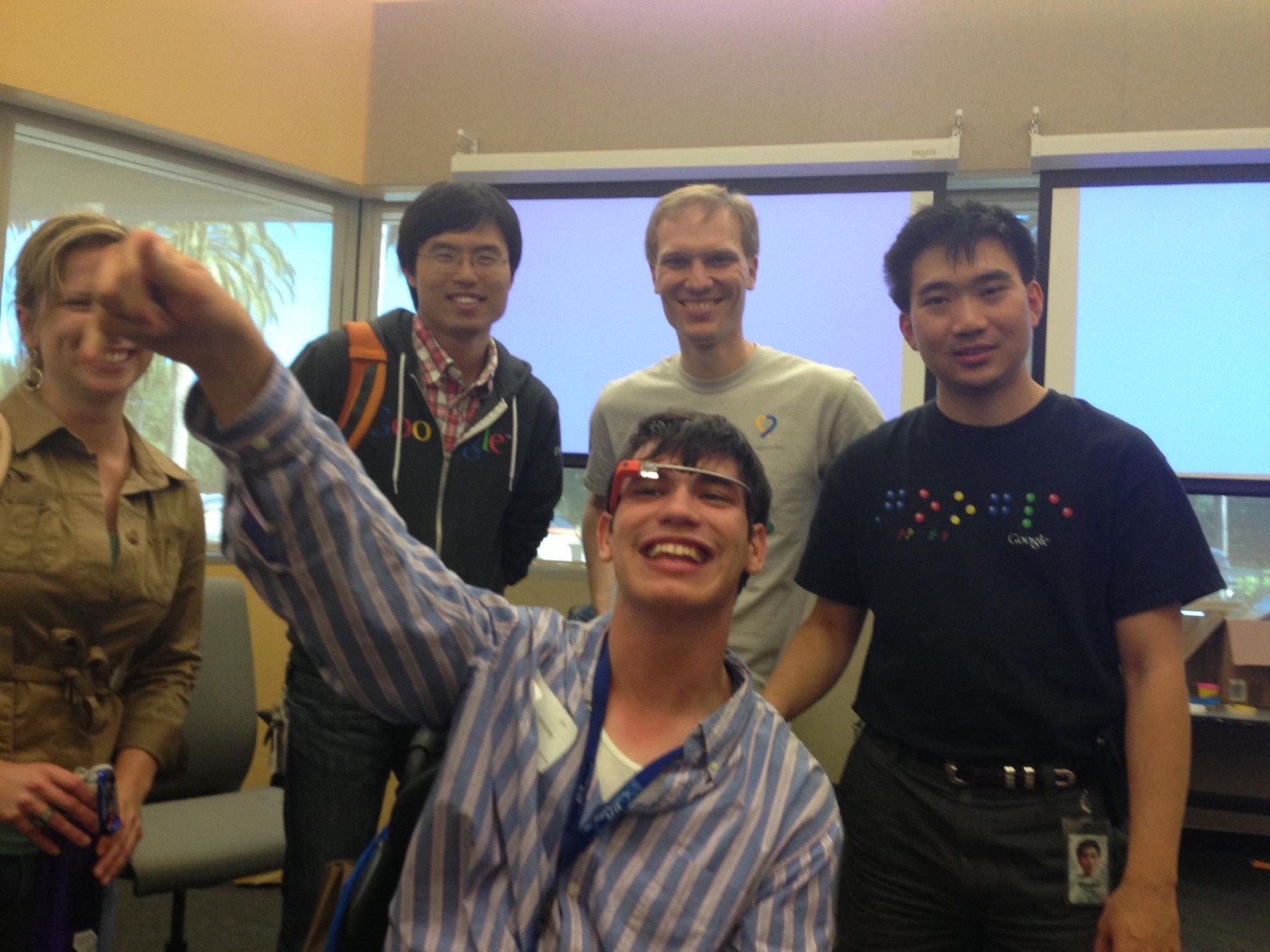

In June 2013, we attended an internal design workshop at Google intended to help educate engineers and product managers about the accessibility needs of users. The workshop paired groups of engineers and product managers with actual users with disabilities to generate ideas for improving products’ accessibility features. We developed features for the future Google Glass that would enable independence. Daniel wanted the ability to turn his phone on so that I or others could see where he was geographically and give him visual feedback and direction on where to go or what to do. Today, there is an incredible app named Be My Eyes that does this. The app connects sighted volunteers with visually impaired users who need help identifying locations or completing specific tasks.

Charles Chen then introduced me to Astrid Weber, a Google UX researcher who was conducting a Google Dragon NaturallySpeaking user study. After Google completed the study, we attended a UX meeting to plan a video capturing Daniel’s daily life. I was put in charge of selecting the set locations and the individuals involved, complying with all legal requirements to film on location and to film those individuals, and creating events for the day.

Will Moran, one of the original ‘Googlers’, and I spoke on Monday morning, October 27, 2014, the day of the shoot. He shared his vision that new technologies would be invented because Daniel’s video would give engineers insight into the daily challenges that confront people with disabilities. The film was one of six videos originally shown internally to engineers and product managers at Google. The six videos were so well received that Google.org made disabilities the focus of its non-profit impact challenge and donated $20 million to unconventional technologies in the disability space.

A few years later, I was at MIT and, by chance, came upon a wheelchair lab that was designing low-cost wheelchairs for developing nations. While I was talking with the lab manager/engineer about the testing equipment, he asked about my involvement with disabilities. I told him about the Google video and its impact. It turned out that this MIT engineer had been a recipient of the Google Disabilities Impact Challenge, which allowed him to continue his work.

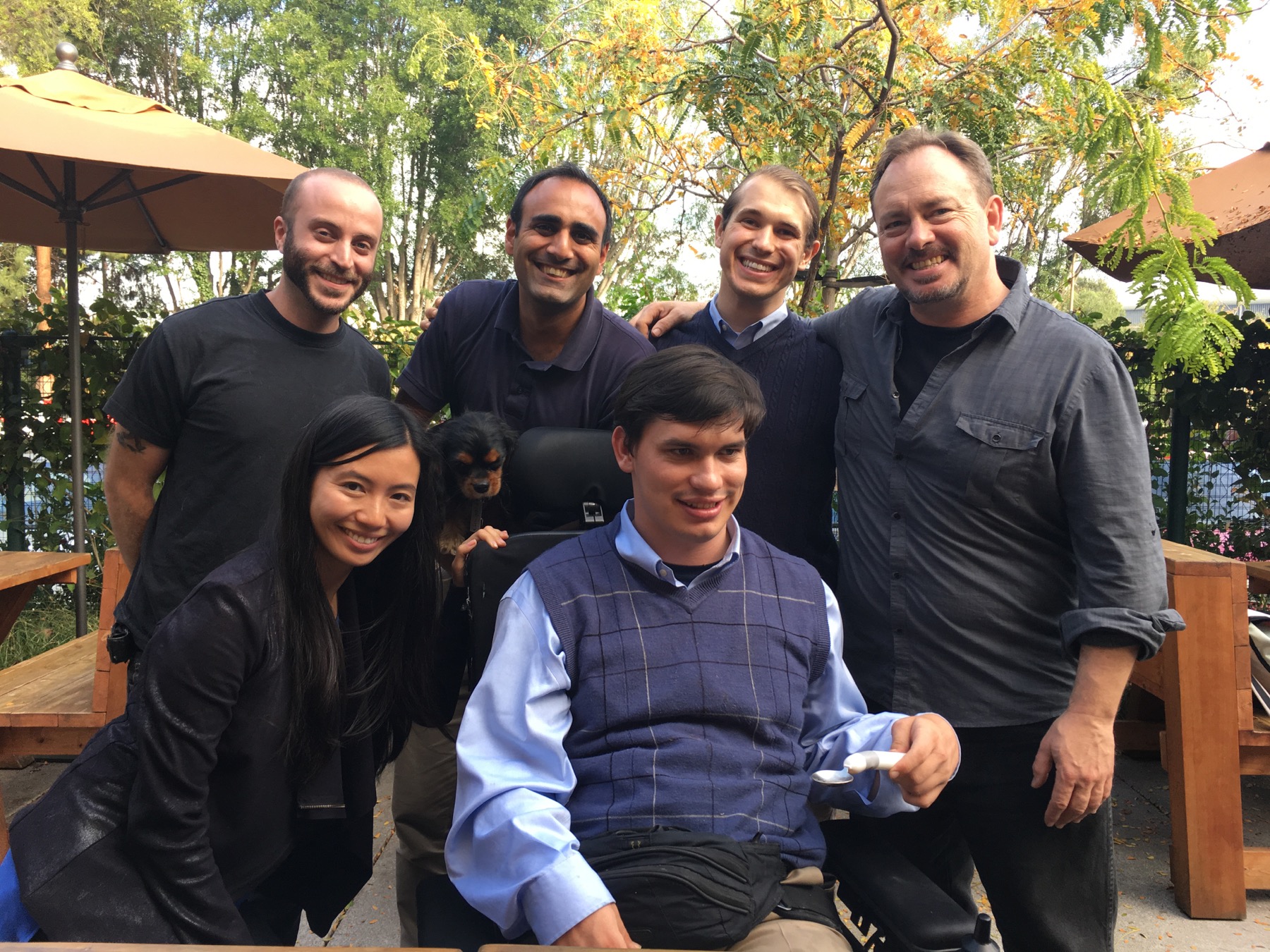

In April 2015, Astrid Weber introduced us to Anupam Pathak, who worked in the Life Sciences division, now called Verily. We started the process of testing and improving a self-stabilizing spoon to promote independence when eating. We participated in clinical studies. After each prototype and subsequent design iteration, I would document Daniel’s eating experience, so that when a glitch occurred or an improvement could be made, there was a visual component to accompany my written assessment. For the first time, Daniel was able to eat soup and cereal independently, without spilling. It was a unique experience attending Google design meetings, testing, and documenting, then repeating the process. We explored creative ideas for using this technology in other applications, including painting, makeup, drill bits, and other accessory attachments. In early November 2016, an internal Google newsletter showed Daniel using the spoon attachment, as well as a painting attachment.

Daniel participated in the filming of the product before launch, interacting with media outlets and serving as one face among the many who benefited from eating independently because of the self-stabilizing spoon, branded “Liftware Level”. My design suggestion to add a magnetic strap was incorporated into the final product, which launched on December 2, 2016. The Liftware video has been seen by millions worldwide and translated into multiple languages.

The years spent inside Google design processes, working closely with accessible design engineers, were not only educational, but also a major inspiration for me to pursue Stanford’s Master’s in Design Impact Engineering. It is a mission for my life, not just a degree.